- Chronics

- Dec 1, 2025

Economic Implications of Declining Compute Costs in AI-Driven Industries

The New Economics of AI: From Scarcity to Abundance, Deep Explanation

What changed, technically and economically

Hardware growth: New generations of accelerators (GPUs, TPUs, NPUs) and more chipmakers increased production capacity. This lowers unit costs and speeds up procurement cycles for compute resources.

Software improvements: Methods like quantization (using 8-bit instead of 16- or 32-bit numbers), pruning (removing unnecessary parameters), distillation (training a smaller model from a larger one), and sparse or dynamic architectures (such as Mixture-of-Experts) significantly cut compute costs per inference and per training run.

Architecture and tool enhancements: Better distributed training frameworks (Horovod, DeepSpeed, JAX/XLA optimizations), more efficient compilers, and inference caches reduce redundant work and, therefore, costs.

Cloud cost benefits: Large cloud providers share infrastructure costs among many customers and price GPU instances competitively to gain market share. Spot and discount markets for spare capacity further lower marginal costs.
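To make the quantization idea under software improvements concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. The function names and sample weights are illustrative assumptions, not any framework's API; production toolchains use more elaborate schemes.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.5, -1.27, 0.02, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is at most half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each stored value now fits in one byte instead of the four bytes a 32-bit float needs, which is where the memory and bandwidth savings come from.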

Economic chain reaction

Lower per-inference and per-training costs lead to more experiments and iterations. This results in faster product-market fit and feature development, which boosts productivity and reduces time to revenue.

Practical indicators (what to track)

- Cost per training epoch for key models (benchmarks)

- Cost per 1 million inferences (in production)

- Time to train from raw data to a deployable model

- Number of experimental runs per engineer each month
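The first two indicators above can be derived from figures most teams already have. The sketch below assumes a single instance billed by the hour; the function names, prices, and throughput numbers are hypothetical placeholders.

```python
def cost_per_epoch(gpu_count, hours_per_epoch, hourly_gpu_cost):
    """Dollar cost of one training epoch on a fixed-size GPU cluster."""
    return gpu_count * hours_per_epoch * hourly_gpu_cost


def cost_per_million_inferences(hourly_instance_cost, inferences_per_second):
    """Dollar cost of serving one million inferences at steady throughput."""
    inferences_per_hour = inferences_per_second * 3600
    return hourly_instance_cost / inferences_per_hour * 1_000_000


# Hypothetical inputs: 8 GPUs at $2.00/hour, 1.5 hours per epoch,
# and a serving instance at $2.00/hour handling 200 requests per second.
epoch_cost = cost_per_epoch(gpu_count=8, hours_per_epoch=1.5, hourly_gpu_cost=2.0)
serving_cost = cost_per_million_inferences(hourly_instance_cost=2.0,
                                           inferences_per_second=200)
print(f"${epoch_cost:.2f} per epoch, ${serving_cost:.2f} per 1M inferences")
```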

Example scenario

A mid-sized healthcare startup used to spend $120,000 per prototype model training cycle. With better tools and cheaper spot GPUs, that cost drops to $6,000. For the same annual budget, this change enables 20 times more prototypes per year and speeds up the search for product-market fit.
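The arithmetic behind the scenario can be checked in a few lines. The two per-cycle costs come from the example above; the annual budget is an assumed figure added only to show how the ratio translates into prototype counts.

```python
old_cost_per_cycle = 120_000   # from the scenario
new_cost_per_cycle = 6_000     # from the scenario
annual_budget = 480_000        # hypothetical, for illustration only

cost_ratio = old_cost_per_cycle / new_cost_per_cycle
prototypes_before = annual_budget // old_cost_per_cycle
prototypes_after = annual_budget // new_cost_per_cycle

print(cost_ratio, prototypes_before, prototypes_after)
```

The multiplier only holds if the training budget stays fixed; a team that cuts its budget as costs fall will see a smaller increase in experiments.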

Democratization of AI Capabilities for SMEs, Deep Explanation

Mechanisms enabling democratization

API-first models: Providers offer model features through pay-as-you-go APIs, allowing SMEs to avoid big engineering costs.

No-code / low-code ML: Tools such as autoML and drag-and-drop model builders enable non-technical users to create models for common tasks.

Pretrained, fine-tunable models: SMEs can fine-tune smaller, more affordable base models using their own data instead of starting from scratch.

Managed services and marketplace models: Integrators, vertical SaaS, and model marketplaces provide domain logic, making it easy to adopt these solutions.

Business use cases where SMEs gain the most

Customer support automation: Use chatbots and intent classification to shorten response times and reduce staffing needs.

Demand forecasting for SMB retail: Make short-term inventory decisions with low-cost time-series models.

Localized NLP: Support customer interactions in native languages without needing to build custom NLP systems.

Automated bookkeeping and classification: Save accountant hours by using models for transaction categorization.

KPIs SMEs should monitor

Manual hours saved each month

Percentage of customer interactions resolved without human help

Inventory carrying cost reduction, in percentage

Changes in response time and Net Promoter Score (NPS) for customers
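Two of these KPIs, the share of interactions resolved without human help and the cost per call, can be computed from a simple interaction log. The sketch below assumes a hypothetical `Interaction` record; real logs will have different fields.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    resolved_by_bot: bool   # True if no human had to step in
    api_cost: float         # dollars spent on model calls for this interaction


def resolution_rate(interactions):
    """Fraction of interactions resolved without human help."""
    if not interactions:
        raise ValueError("no interactions to measure")
    return sum(i.resolved_by_bot for i in interactions) / len(interactions)


def cost_per_call(interactions):
    """Average model cost per interaction, in dollars."""
    if not interactions:
        raise ValueError("no interactions to measure")
    return sum(i.api_cost for i in interactions) / len(interactions)


# Hypothetical sample log.
log = [
    Interaction(True, 0.002),
    Interaction(True, 0.003),
    Interaction(False, 0.005),
    Interaction(True, 0.002),
]
rate = resolution_rate(log)
avg_cost = cost_per_call(log)
print(f"{rate:.0%} resolved automatically at ${avg_cost:.4f} per call")
```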

Implementation checklist for SMEs

Begin with an API-based proof-of-concept for one process, like chat automation.

Gather a small labeled dataset of 1,000 to 5,000 examples.

Fine-tune a lightweight model or use prompt engineering for large language models.

Measure accuracy, resolution rate, and cost per call; review and improve every month.

Establish guardrails, such as human oversight, for handling edge cases.

Explosion of Automation Across Traditional Industries, Deep Explanation

How cheaper computing scales automation adoption

Lower inference costs lead to deploying more agents and automating more workflows, such as routing and triage.

Cheap training allows for frequent retraining to respond to seasonal and market shifts.

Affordable edge computing enables real-time automation at the device level, including robots and cameras.

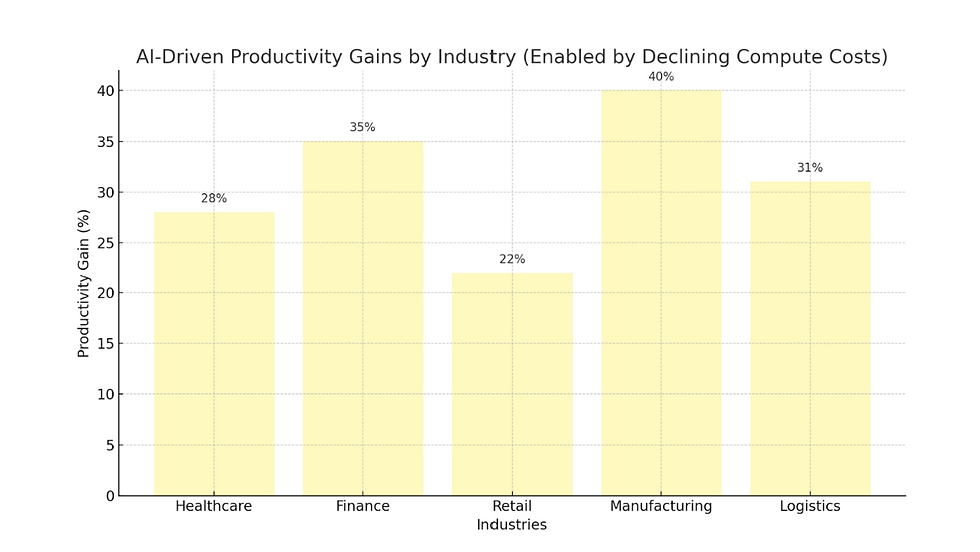

Industry-specific deep dives

Manufacturing

Use cases include predictive maintenance, visual defect detection, and dynamic line balancing.

Mechanics involve cameras with edge inference that detect anomalies in near real time, while models are retrained in the cloud weekly on new failure modes.

Business impact results in increased uptime, longer mean time between failures (MTBF), and reduced scrap rates.

Retail

Use cases include dynamic pricing, real-time recommendations, and cashierless stores.

Mechanics involve streaming purchase intent signals that feed personalization engines to optimize offers.

Business impact leads to larger basket sizes, better conversion rates, and decreased shrinkage.

Healthcare

Use cases include automated reading of imaging studies, triage of lab results, and virtual assistants.

Mechanics involve federated and regional models that protect patient privacy while improving local accuracy.

Business impact helps reduce diagnostic bottlenecks and extends specialist reach into rural areas.

Logistics

Use cases include route optimization, ETA prediction, and freight matching.

Mechanics involve cheaper continuous optimization that enables dynamic rerouting based on real-time traffic and demand.

Business impact means fewer empty miles, lower fuel costs, and same-day or next-day capabilities.

Organizational changes required

Cross-functional automation teams that include ops, ML engineers, and domain experts.

Data pipelines for continuous retraining and monitoring.

Operational service level agreements for automated agents, including error budgets and human escalation windows.
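An error budget for an automated agent can be expressed as the number of failures the service level objective tolerates over a period. The following is a rough sketch under assumed names and thresholds, not a prescribed policy.

```python
def allowed_failures(slo_target, total_requests):
    """Failures tolerated by the SLO, e.g. 1% of requests for a 99% target."""
    return (1 - slo_target) * total_requests


def budget_consumed(slo_target, total_requests, failed_requests):
    """Fraction of the error budget already used (1.0 means exhausted)."""
    allowed = allowed_failures(slo_target, total_requests)
    if allowed == 0:
        return float("inf") if failed_requests else 0.0
    return failed_requests / allowed


def needs_human_escalation(slo_target, total_requests, failed_requests,
                           threshold=1.0):
    """Escalate to human operators once the budget crosses the threshold."""
    return budget_consumed(slo_target, total_requests, failed_requests) >= threshold


# Hypothetical month: 10,000 automated decisions against a 99% target.
print(budget_consumed(0.99, 10_000, 40))          # roughly 0.4
print(needs_human_escalation(0.99, 10_000, 120))  # budget exceeded
```

A team could lower `threshold` to, say, 0.8 so that humans are brought in before the budget is fully spent.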

CapEx to OpEx Transformation of AI Economics, Deep Explanation

What changes when compute is OpEx

Capital budgeting shifts, eliminating the need for multi-year depreciation schedules for GPUs. Financial planning becomes more adaptable to variable costs.

Agility improves, allowing projects to scale quickly without lengthy procurement cycles.

Pricing and monetization adapt, with companies designing products that offer usage-based tiers linked to compute consumption.

Financial mechanics and implications

There is a tradeoff between predictability and flexibility. OpEx increases variable costs, requiring forecasts to model usage volatility.

Cash flow benefits arise from lower upfront cash outflows, making it better for startups and high-growth ventures.

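Accounting effects follow from the move from CapEx to OpEx: cloud spend is expensed as incurred and reduces EBITDA, whereas purchased hardware is depreciated over time and that depreciation is excluded from EBITDA. Tax treatment changes as well.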

Example of product redesign

A SaaS provider shifts from seat-based licenses to a hybrid model: a monthly base fee plus per-inference credits. Customers pay in proportion to their actual usage, allowing the vendor to align costs and revenues more closely.
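A hybrid plan of this kind reduces to a small billing function. The sketch below assumes a base fee that includes a block of inferences, with overage billed per inference; all prices and quantities are hypothetical.

```python
def monthly_invoice(base_fee, included_inferences, price_per_inference,
                    inferences_used):
    """Base fee plus per-inference charges for usage above the included block."""
    overage = max(0, inferences_used - included_inferences)
    return base_fee + overage * price_per_inference


# Hypothetical plan: $500 base fee, 100,000 inferences included,
# $0.002 for each additional inference.
light_user = monthly_invoice(500, 100_000, 0.002, inferences_used=50_000)
heavy_user = monthly_invoice(500, 100_000, 0.002, inferences_used=250_000)
print(light_user, round(heavy_user, 2))
```

The base fee covers fixed costs, while the overage term makes revenue track the vendor's variable compute bill.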

Risk mitigation

Use reservations or commitment plans for baseline usage to lower per-unit costs.

Incorporate throttling and cost-limiting features in customer-facing products.

Implement monitoring and alerts for unusual model usage to prevent runaway invoices.
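The last two mitigations can be prototyped with very little code. This sketch assumes daily spend figures are available and uses a simple multiple-of-average rule; the 3x multiplier and the cap are illustrative assumptions, not recommended values.

```python
from statistics import mean


def is_unusual_spend(history, today, multiplier=3.0):
    """Flag today's spend if it exceeds `multiplier` times the trailing average."""
    if not history:
        return False  # no baseline yet, so nothing to compare against
    return today > multiplier * mean(history)


def allow_request(spent_this_month, request_cost, monthly_cap):
    """Cost limiter: reject work that would push spend past the cap."""
    return spent_this_month + request_cost <= monthly_cap


# Hypothetical daily spend in dollars.
recent_days = [100, 110, 90, 100]
print(is_unusual_spend(recent_days, today=450))  # spike well above average
print(allow_request(spent_this_month=998, request_cost=5, monthly_cap=1000))
```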

Emergence of AI-Native Business Models: Deep Explanation

Characteristics of AI-native companies

Data-first value creation: Company value comes from data assets and ongoing model improvement.

Marginal-cost advantage: Once models are trained, each additional inference has a low cost.

Outcome-driven pricing: Pricing connects to outcomes, such as accuracy, savings, and performance, instead of just access to the product.

Model archetypes enabled by cheap compute

Autonomous agents and micro-workers: Agents complete tasks, such as filling out forms or triaging leads, and are billed per task.

Real-time personalization platform: Custom micro-models for each user create highly relevant experiences.

AI-driven marketplaces: These marketplaces match supply and demand with minimal friction by using predictions at scale.

Feature-as-a-Service: Small AI tools, like speech-to-text and sentiment analysis, are sold per use.

Economics at scale

The business model supports high-frequency, low-margin transactions that generate large revenue through volume.

Cost structure: Variable costs increase with more inferences. It is essential to enhance model efficiency to keep margins healthy.

Example: AI-as-a-Service micro-utilities

A company offers an "invoice-extraction" API for $0.001 per document. At 10 million documents per month, this results in $10,000 in monthly revenue. With optimized inference pipelines and batch processing, profit margins can be strong.
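The revenue figure follows directly from price times volume. To show how margin depends on inference efficiency, the sketch below adds a compute cost per document; that cost is a hypothetical assumption, not a figure from the example.

```python
def monthly_unit_economics(price_per_doc, cost_per_doc, docs_per_month):
    """Return (revenue, compute cost, gross margin) for a per-document API."""
    revenue = price_per_doc * docs_per_month
    cost = cost_per_doc * docs_per_month
    margin = (revenue - cost) / revenue
    return revenue, cost, margin


# Price and volume are from the example; cost_per_doc is assumed.
revenue, cost, margin = monthly_unit_economics(
    price_per_doc=0.001,
    cost_per_doc=0.0004,
    docs_per_month=10_000_000,
)
print(f"revenue ${revenue:,.0f}, cost ${cost:,.0f}, margin {margin:.0%}")
```

At this price point every tenth of a cent matters, so batching and other efficiency work translate directly into margin.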

Supply-Side Economics: Increased Compute Supply Leads to Lower Market Prices: Deep Explanation

Structural drivers on the supply side

Improvements in manufacturing scale: Chip factories increase capacity, shortening lead times and lowering per-unit costs.

New entrants and regional suppliers: Competition from local chipmakers puts downward pressure on prices.

Hyperscaler inventory strategies: Providers might sacrifice margin to gain market share through aggressive pricing and preemptible instances.

Market consequences

Compute behaves like a commodity: The market becomes more responsive to price changes and demand.

Price cycles: Short-term periods of excess supply are often followed by consolidation or temporary shortages, requiring companies to hedge.

Regional compute hubs: Countries and areas create clusters that benefit from lower power costs and tax incentives.

Policy and macro considerations

Strategic national spending on data center infrastructure can be a competitive advantage.

Energy and cooling expenses become significant. Sustainable computing strategies, such as improving power usage effectiveness, influence long-term availability and pricing.

Demand-Side Dynamics: AI Usage Increases When Costs Fall: Deep Explanation

Internal diffusion within firms

From pilots to full products: Lower costs eliminate the economic barriers that prevent pilots from scaling.

Cross-departmental AI adoption: Marketing, sales, HR, operations, and finance start integrating AI into their daily tasks.

Decentralized model ownership: Teams create their own simple models under specific guidelines.

How usage creates a positive loop

More usage leads to more labeled operational data, which improves models and results in better outcomes that encourage even more usage.

This positive feedback loop is strongest in areas with continuous data generation, like e-commerce, streaming, and logistics.

Governance challenge

Shadow ML: Teams use models without central IT oversight, which raises risks.

There is a need for centralized platforms and policies, such as model registries, access controls, and cost-visibility dashboards.

KPIs to measure

- Number of production models deployed by organization or unit

- Average inferences per day for each model and cost per inference

- Model performance drift and percentage of models retrained monthly

Macro-Economic Impact: AI as a GDP Accelerator, Deep Explanation

Transmission channels to GDP

Productivity channel: Automation increases output per labor hour.

Investment channel: Lower computing costs encourage private investment in AI services and startups.

Export and competitiveness channel: Companies lower costs and improve quality, allowing them to compete internationally.

Innovation channel: More research and development experiments speed up technological advances, increasing total factor productivity (TFP).

Modeling effect (conceptual)

GDP_growth_AI = α × productivity_gain + β × AI_startup_growth + γ × export_gain

where α, β, and γ are coefficients estimated through national accounts and sectoral productivity studies.
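The conceptual model is a weighted sum, which is easy to express as a function. The coefficient and input values below are placeholders chosen for illustration only; they are not estimates from any national accounts data.

```python
def gdp_growth_ai(productivity_gain, ai_startup_growth, export_gain,
                  alpha, beta, gamma):
    """Linear combination of the three channels, in percentage points."""
    return (alpha * productivity_gain
            + beta * ai_startup_growth
            + gamma * export_gain)


# Placeholder values, not empirical estimates.
growth = gdp_growth_ai(
    productivity_gain=2.0,
    ai_startup_growth=5.0,
    export_gain=1.0,
    alpha=0.5,
    beta=0.1,
    gamma=0.2,
)
print(round(growth, 2))
```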

Policy implications

Supporting computing infrastructure through subsidies or tax incentives can create national advantages.

Workforce retraining programs to help workers shift into high-productivity jobs enhanced by AI are crucial.

Empirical expectations

In countries with targeted computing subsidies and talent pipelines, the GDP contribution from AI-intensive sectors could grow faster than that of slower legacy sectors, plausibly by several percentage points over a decade.

Hyper-Localization Enabled by Cheap Compute, Deep Explanation

What hyper-localization means

Language and dialect models: Small models trained on local languages, slang, and contexts.

Regulatory and cultural nuance: Models consider local compliance, cultural norms, and business practices.

Domain-specific micro-models: Agriculture-specific models tailored to local crops, climate, and practices.

Why lower compute costs matter here

Training or fine-tuning many small models, one for each region or language, becomes affordable when computing costs are low.

Commercial impacts

Greater adoption among underserved populations opens new markets.

Better user retention and product-market fit due to culturally relevant user experience (UX).

Local startups create intellectual property suited to regional challenges, such as microinsurance underwriting for smallholder farmers.

Examples of feasible products

AI-powered extension services in agriculture that use SMS or voice-based advice in local dialects.

Localized conversational commerce bots for informal markets.

City-specific traffic and logistics optimization systems.

Continuous Learning as a Competitive Moat, Deep Explanation

Continuous vs. batch training

Batch training: Retrain models periodically, such as weekly or monthly.

Continuous training: Models continuously process new data and update in near real-time, enhancing relevancy.

Benefits of continuous learning

Models react quickly to seasonality, trend changes, and adversarial inputs.

Reduced model decay leads to higher long-term accuracy.

Personalization adapts as user behavior changes.

Operational requirements

Streaming data pipelines and automated validation tests.

Strong A/B testing frameworks for live model updates.

Canary deployments and rollback systems to prevent issues during updates.
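A canary deployment needs an explicit rule for promoting or rolling back a new model version. The following sketch shows one possible gate based on error rates; the function name, the minimum sample size, and the tolerance are assumptions for illustration.

```python
def canary_decision(baseline_error_rate, canary_error_rate, canary_requests,
                    min_requests=1000, tolerance=0.005):
    """Decide what to do with a model version serving a slice of traffic.

    Returns "wait" until enough traffic has been observed, "rollback" if the
    canary is worse than the baseline by more than `tolerance`, and
    "promote" otherwise.
    """
    if canary_requests < min_requests:
        return "wait"
    if canary_error_rate > baseline_error_rate + tolerance:
        return "rollback"
    return "promote"


# Hypothetical readings from a live update.
print(canary_decision(0.020, 0.021, canary_requests=5000))
print(canary_decision(0.020, 0.040, canary_requests=5000))
print(canary_decision(0.020, 0.040, canary_requests=100))
```

A production gate would usually add a statistical significance check, since small differences in error rate can be noise.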

Competitive economics

Continuous learners gain performance advantages that are expensive for late entrants to mimic, thanks to data advantages and operational expertise.

Workforce Transformation & Creation of AI-Augmented Roles

| Industry | Transformation | Economic Outcome |
| --- | --- | --- |
| Healthcare | AI speeds diagnostics & drug discovery | Lower treatment cost, faster diagnosis |
| Retail | Personalization + automation | Higher sales, reduced inventory waste |
| Logistics | Smarter routing & predictive maintenance | Lower shipping/fuel cost |
| Finance | Fraud detection, credit scoring | Lower risk, faster decisions |
| Manufacturing | Robots + AI quality checks | Higher output, fewer defects |
| Agriculture | Prediction models for yield | Higher crop output |
| Education | AI tutors | Personalized learning |
| Public Sector | Smart governance & automation | Less corruption, faster services |

Shift in labor demand

Demand is rising for hybrid roles that combine domain knowledge and AI tools.

Routine roles are declining while oversight and creative roles are increasing.

New roles and core responsibilities

Prompt engineers design and optimize prompts for LLMs to ensure reliable outputs.

AI ops (MLOps) engineers maintain pipelines, automate retraining, and manage deployments.

AI ethicists or compliance officers make sure models follow regulatory and ethical guidelines.

Automation supervisors are human operators who deal with edge cases and exceptions.

Skills & training strategy

Short, practical bootcamps cover MLOps fundamentals, prompt engineering, and data labeling quality control.

On-the-job rotations pair domain experts with ML practitioners.

Economic & social effects

In the short term, wage polarization may rise. Upskilling could reduce long-term inequality if policies and corporate programs are coordinated.

Productivity per worker increases, changing compensation dynamics and allowing for reinvestment into higher-value activities.

Implementation Playbook — How an Organization Should Act Now

Cost Visibility First: Set up tracking dashboards for $/inference and $/training.

Pilot with Clear KPIs: Choose 1 or 2 high-impact use cases with measurable ROI, such as reducing churn or increasing throughput.

Governance Layer: Create a model registry, cost quotas, and approval workflows to prevent excess spending.

Operationalize Continuous Learning: Begin with weekly retraining and transition to daily or streaming retraining as reliability improves.

Optimize Models: Use techniques like pruning, quantization, batching, and caching to lower inference costs.

Billing Strategy: Develop product pricing that aligns customer value with compute costs, using hybrid subscriptions and usage credits.

Talent & Culture: Hire or rotate people into AI-adjacent roles and invest in continuous training.

Sustainability Angle: Manage costs and emissions by tracking power usage effectiveness (PUE), using spare capacity through spot instances, and exploiting model sparsity.
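Of the optimizations listed in the playbook, caching is often the quickest to try. The sketch below uses the standard library's `functools.lru_cache` to avoid paying for repeated identical requests; `expensive_model` is a stand-in for a real model call, not an actual API.

```python
from functools import lru_cache

model_calls = 0  # counts how often the (simulated) model actually runs


def expensive_model(text):
    """Stand-in for a paid inference call."""
    global model_calls
    model_calls += 1
    return text.strip().lower()


@lru_cache(maxsize=1024)
def cached_inference(text):
    """Serve repeated inputs from memory instead of re-running the model."""
    return expensive_model(text)


queries = ["Reset password", "Reset password", "Track order", "Reset password"]
results = [cached_inference(q) for q in queries]
print(model_calls, cached_inference.cache_info())
```

This only helps when identical inputs recur and the model's output for a given input does not need to change; otherwise the cache needs an expiry or invalidation strategy.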

Conclusion

The drop in compute costs marks more than just an economic change; it reshapes how industries think, build, and scale. When computation is cheaper, intelligence becomes more available. The divide between small innovators and large companies starts to vanish.

We see a consistent pattern across various sectors, from healthcare to logistics and energy to finance. As compute costs decrease, AI adoption speeds up, productivity increases, and new value chains develop. Industries that previously had issues with data processing, due to high costs or heavy resource demands, can now run large models in real-time. This is particularly impactful in areas where infrastructure was a hurdle.

The key insight here is strategic. Companies no longer compete based on who has the most computing power; they compete on how well they use intelligence. The change in cost drives a new type of competitive edge—not based on hardware, but on decision-making, speed, and learning.

Recommendations

Here are practical recommendations for businesses, investors, policymakers, and founders as they navigate the era of falling compute costs:

1. Rebuild workflows with an AI-first mindset.

Don’t force AI into outdated processes. Start from scratch, assuming computation and inference are cheap and easy. This opens up new layers of automation and efficiency that older systems can't support.

2. Focus on data readiness instead of compute costs.

As compute becomes less expensive, data, rather than hardware, will be the limitation. Organizations should invest in:

- cleaner datasets

- governance frameworks

- edge-to-cloud pipelines

- continuous labeling and validation loops

Data quality will be the main source of competitive edge.

3. Shift budgets from infrastructure to AI operations.

With lower compute costs, companies should reallocate funds toward:

- fine-tuning internal models

- deploying model evaluation systems

- setting up automated retraining pipelines

- creating human-in-the-loop systems

This creates a sustainable foundation for AI operations.

4. Develop hybrid compute strategies.

Combine cloud, edge, and on-prem resources based on your workload needs. As inference becomes cheaper, many companies will move real-time operations to the edge to cut down on latency and costs.

5. Invest in long-term AI cost predictions.

Falling compute prices will affect:

- product pricing

- customer acquisition costs

- gross margin

- infrastructure planning

- lifetime value estimates

Companies should adopt flexible cost models that predict cost-per-inference over the next 3 to 5 years. This helps avoid overspending on soon-to-be common capabilities.
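A flexible cost model can start as a simple compounding projection. The sketch below assumes a constant annual decline rate, which is a simplification; the starting cost and the 30% rate are hypothetical inputs, not forecasts.

```python
def project_cost_per_inference(current_cost, annual_decline, years):
    """Project cost per inference, assuming a constant yearly percentage drop.

    Returns a list with one entry per year, starting with the current cost.
    """
    if not 0 <= annual_decline < 1:
        raise ValueError("annual_decline must be in [0, 1)")
    return [current_cost * (1 - annual_decline) ** year
            for year in range(years + 1)]


# Hypothetical inputs: $0.002 per inference today, falling 30% per year.
projection = project_cost_per_inference(0.002, annual_decline=0.30, years=5)
for year, cost in enumerate(projection):
    print(f"year {year}: ${cost:.5f}")
```

Running the projection under a few different decline rates gives a range that can feed pricing and gross margin planning.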

6. Integrate automation into decision-making.

Low compute costs allow for:

- predictive routing

- self-optimizing supply chains

- dynamic pricing

- automated risk assessments

- real-time personalization

Businesses that automate their decision processes will reach levels of scale that manual systems cannot achieve.

7. View the decline in compute costs as a chance for innovation.

Teams should set aside budgets for experimental AI projects. As compute costs fall, the opportunity cost of trying new things also drops. This makes innovation quicker, cheaper, and less risky—a perfect chance to build competitive advantages.

8. Proactively prepare for workforce changes.

AI-led industries require:

- technical skills

- data understanding

- model oversight

- cross-team decision-makers

Companies need to develop their talent pipelines before automation disrupts current roles to prevent skill gaps.